Empowering and informing consumers is central to Consumer Reports’ (CR) mission as a nonprofit member organization. It follows, then, that when we build tech products and services, the way we build them matters. We’re committing to building openly and putting the needs of our members at the center of all of our design choices.

In Part 1 of this series, we talked about a chatbot prototype we’ve been hacking on: a conversational research assistant that knows everything CR knows and provides trusted, transparent advice to consumers conducting product research. In this post, we’ll dive into how we’re thinking about LLM orchestration for this prototype.

LLM Orchestration

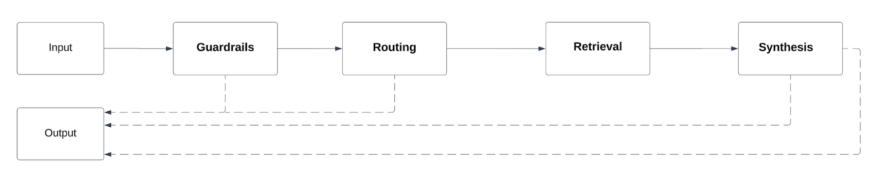

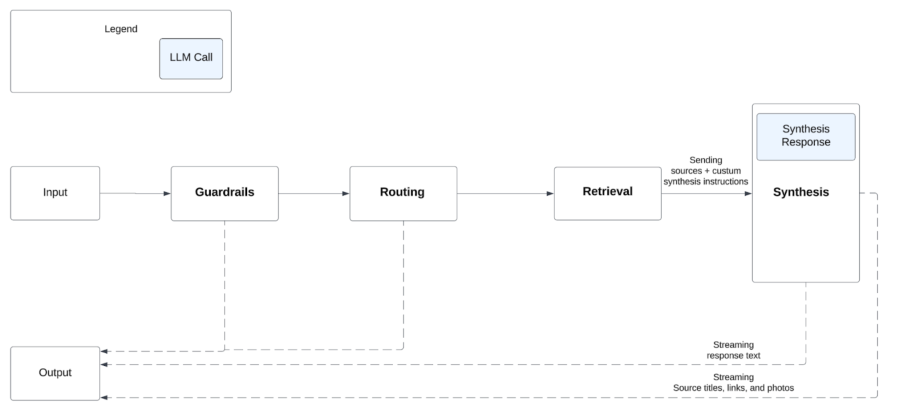

CR has been hard at work tracking the latest developments in generative AI, integrating the parts that best fit the needs of the project, and looking for opportunities to innovate. We believe that our approach to LLM orchestration, as laid out in the diagram above, will give us a foundation to iterate on and improve in the future.

As shown above, the four main steps between a member’s question and our chatbot’s response are guardrails, routing, retrieval, and synthesis. We explain each step, and why we use it, in the sections below.
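To make the flow concrete, here is a minimal Python sketch of how the four steps might be chained. It is not CR’s code: `answer_question`, `RouteDecision`, `Answer`, and the refusal wording are hypothetical, and each stage is passed in as a function so the control flow can be read and tested on its own.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class RouteDecision:
    """Hypothetical routing result: what the question asks, and about which category."""
    intent: Optional[str]
    category: Optional[str]
    canned_answer: Optional[str] = None  # set when a FAQ route matches


@dataclass
class Answer:
    text: str
    sources: list[dict] = field(default_factory=list)


REFUSAL = "Sorry, I can't help with that."  # hypothetical wording


def answer_question(
    question: str,
    is_flagged: Callable[[str], bool],
    route: Callable[[str], RouteDecision],
    retrieve: Callable[[str, RouteDecision], list[dict]],
    synthesize: Callable[[str, RouteDecision, list[dict]], str],
) -> Answer:
    # 1. Guardrails: a flagged question never reaches an LLM endpoint.
    if is_flagged(question):
        return Answer(REFUSAL)
    # 2. Routing: classify the question; a FAQ match short-circuits here.
    decision = route(question)
    if decision.canned_answer is not None:
        return Answer(decision.canned_answer)
    # 3. Retrieval: fetch grounding material for this intent and category.
    sources = retrieve(question, decision)
    # 4. Synthesis: a generative model writes the answer from those sources.
    return Answer(synthesize(question, decision, sources), sources)
```

Keeping each stage behind a plain function boundary makes it easy to swap one implementation (say, a different router) without touching the others.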

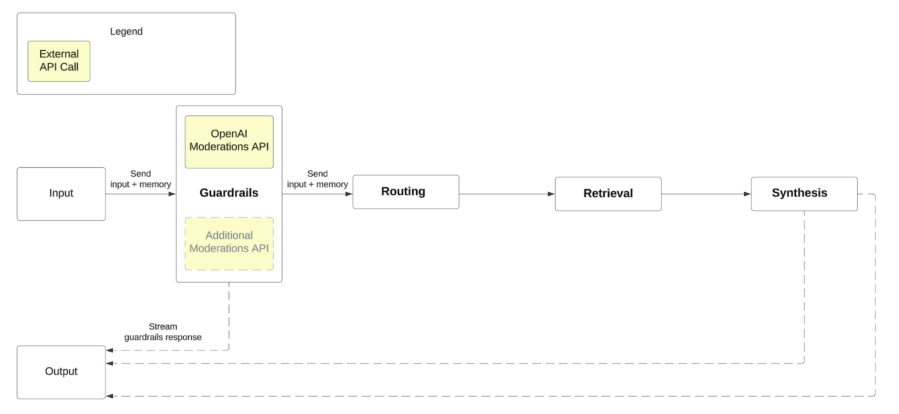

Guardrails

Input guardrails prevent problematic questions from ever reaching an LLM endpoint. We’re using OpenAI’s Moderations API, which evaluates text against a set of predefined categories and flags potential violations such as hate speech, self-harm, sexual content, and violence. Using a pretrained model like this frees us from having to train a model ourselves, while still letting us control how we respond to, and how heavily we weight, different categories of harm.
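A minimal sketch of such an input guardrail, assuming the v1+ `openai` Python SDK. The API returns a `flagged` boolean based on OpenAI’s own cutoffs, along with per-category `category_scores`; applying our own per-category thresholds to those scores is one way to control how heavily each category is weighted. The threshold values and the `violations`/`moderate` names are hypothetical.

```python
from typing import Mapping, Optional

# Hypothetical per-category thresholds: a lower value blocks more aggressively.
# Keys follow the Moderations API category names; the numbers are illustrative only.
THRESHOLDS = {
    "hate": 0.4,
    "self-harm": 0.2,
    "sexual": 0.5,
    "violence": 0.6,
}
DEFAULT_THRESHOLD = 0.5  # applied to any category not listed above


def violations(category_scores: Mapping[str, Optional[float]]) -> list[str]:
    """Return the categories whose score meets or exceeds our own threshold."""
    return sorted(
        category
        for category, score in category_scores.items()
        if score is not None and score >= THRESHOLDS.get(category, DEFAULT_THRESHOLD)
    )


def moderate(text: str) -> list[str]:
    """Score text with OpenAI's Moderations API, then apply our thresholds.

    Needs the `openai` package and an OPENAI_API_KEY in the environment. The import
    is deferred so the policy logic above can be used and tested offline.
    """
    from openai import OpenAI

    client = OpenAI()
    result = client.moderations.create(input=text).results[0]
    # by_alias=True yields API-style keys such as "self-harm" and "hate/threatening".
    return violations(result.category_scores.model_dump(by_alias=True))
```

An empty list means the question can proceed to routing; a non-empty list tells us which categories tripped, so the response can differ by category.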

The Moderations API gives us a baseline of safety and security, but we plan to build out our guardrails further, learning from and innovating on the work of others in the space. As we continue to build, we’ll look into other pretrained models for detecting additional forms of harmful input, as well as harmful output. If you’re aware of any that might be relevant to CR, do let us know! Shoot us an email at innovationlab@cr.consumer.org.

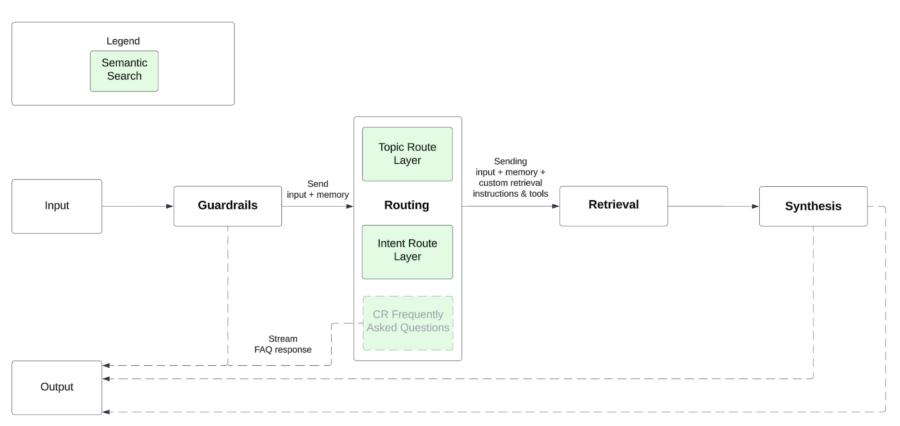

Routing

Once an input passes the guardrails, we move on to answering it in a way that balances latency, cost, and precision. An emerging best practice for striking that balance is to use text embeddings (numerical representations of text that are a core building block of LLMs) to run a semantic search that matches a user’s question against a set of predefined routes, each defined by example utterances. This lets us quickly decide what a question is asking and route it to an LLM with a custom set of instructions and tools. Several libraries can help with this (Guardrails AI, NeMo Guardrails), but we chose the Semantic Router library, developed by Aurelio AI, because it offered the leanest way to route without an LLM call.
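The sketch below illustrates the idea behind embedding-based routing without depending on any library; it is not Semantic Router’s API. Each route is defined by a few example utterances, and a question is assigned to the route with the most similar utterance, or to no route if nothing clears a similarity threshold. The toy bag-of-words `embed()` is an assumption standing in for a real embedding model, and `EmbeddingRouter` and the 0.3 threshold are hypothetical.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass
from typing import Optional


def embed(text: str) -> Counter:
    """Toy stand-in for a real embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


@dataclass
class Route:
    name: str
    utterances: list[str]  # example questions that define the route


class EmbeddingRouter:
    def __init__(self, routes: list[Route], threshold: float = 0.3):
        self.threshold = threshold
        # Embed every example utterance once, up front.
        self.index = [(r.name, embed(u)) for r in routes for u in r.utterances]

    def __call__(self, question: str) -> Optional[str]:
        """Return the closest route's name, or None if nothing is similar enough."""
        q = embed(question)
        best_name, best_score = None, 0.0
        for name, vector in self.index:
            score = cosine(q, vector)
            if score > best_score:
                best_name, best_score = name, score
        return best_name if best_score >= self.threshold else None
```

For instance, if a `faq` route includes the utterance "what is your privacy policy", the question "What is the privacy policy?" scores 0.8 against it under this toy embedding. A real embedding model can also match paraphrases that share no words, which is what makes the approach useful in practice. Either way, routing costs one embedding plus some similarity computations, with no LLM call.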

We currently have three routers, each performing at around 85-90% accuracy on our test set. The first identifies the user’s intent: whether they’re looking for a product, for information about a product, or for information about a category of products. The second identifies the product category (e.g., cars, mattresses, or dishwashers). The third identifies whether the user is asking a frequently asked question (FAQ) that warrants a specific canned answer, such as questions about the chatbot’s functionality, CR’s mission, or our privacy policy.
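Here is one way the three routers could be combined, along with how a per-router accuracy figure is computed over a labeled test set. Each router is treated as a function that returns a label or `None`. Checking the FAQ router first, so that a canned answer skips the other two, is our assumption rather than something described here, and all names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# A router returns a label, or None when no route matches.
Router = Callable[[str], Optional[str]]


@dataclass
class RoutingDecision:
    intent: Optional[str] = None
    category: Optional[str] = None
    faq: Optional[str] = None


def route_question(question: str, intent: Router, category: Router, faq: Router) -> RoutingDecision:
    # Assumed ordering: a FAQ match leads straight to a canned answer,
    # so the intent and category routers don't need to run.
    faq_label = faq(question)
    if faq_label is not None:
        return RoutingDecision(faq=faq_label)
    return RoutingDecision(intent=intent(question), category=category(question))


def accuracy(router: Router, labeled: list[tuple[str, Optional[str]]]) -> float:
    """Fraction of (question, expected_label) pairs the router labels correctly."""
    if not labeled:
        raise ValueError("empty test set")
    correct = sum(router(question) == expected for question, expected in labeled)
    return correct / len(labeled)
```

Including test questions whose expected label is `None` also measures how often a router correctly declines to match, not just how often it picks the right route.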

As we continue to iterate on and improve our system, we’re looking for additional places to use text embedding search. We’ve found that using it in place of an LLM call has the potential to decrease latency and cost while increasing security. At the same time, with the release of GPT-4o, we’re considering whether the cost, latency, and precision of LLM calls may improve enough to warrant switching to them for routing. Let us know what you think!

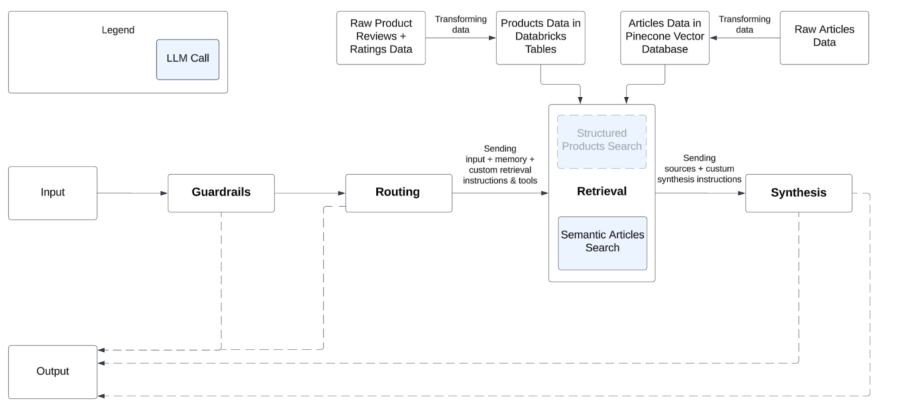

Retrieval

What sets our approach apart from general-purpose chatbots like OpenAI’s ChatGPT, or e-commerce-focused chatbots like Amazon’s Rufus, is that we ground it in CR’s expertise, built over more than 90 years of consumer research and advocacy, through a process called Retrieval-Augmented Generation (RAG).

For less data-driven questions, we’ve implemented a RAG strategy that uses semantic search over thousands of articles published on CR’s website. We split each article into chunks, convert each chunk into a text embedding vector, and store that vector, along with some metadata, in a Pinecone vector database. When a user asks a question, we convert the question into an embedding vector, search the database for the vectors most semantically similar to it, and pull out their chunks. We then use those chunks as sources for our response. This is the current industry-standard approach to RAG.
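Below is a dependency-free sketch of the indexing and retrieval steps just described. Two substitutions are assumptions: a toy bag-of-words `embed()` stands in for a real embedding model, and `InMemoryIndex` stands in for Pinecone. The chunk size and overlap (counted in words) are illustrative defaults, and the metadata fields and function names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for a real text-embedding model (bag of words)."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def chunk_text(text: str, size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("need 0 <= overlap < size")
    words = text.split()
    chunks = []
    for start in range(0, len(words), size - overlap):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):  # this window already reached the end
            break
    return chunks


class InMemoryIndex:
    """Stands in for a vector database: stores (id, vector, metadata) records."""

    def __init__(self) -> None:
        self.records: list[tuple[str, Counter, dict]] = []

    def upsert(self, record_id: str, vector: Counter, metadata: dict) -> None:
        # Replace any existing record with the same id, then add the new one.
        self.records = [r for r in self.records if r[0] != record_id]
        self.records.append((record_id, vector, metadata))

    def query(self, vector: Counter, top_k: int = 3) -> list[dict]:
        """Return metadata of the top_k most similar records (exhaustive scan)."""
        scored = sorted(self.records, key=lambda r: cosine(vector, r[1]), reverse=True)
        return [metadata for _, _, metadata in scored[:top_k]]


def index_article(index: InMemoryIndex, url: str, title: str, body: str) -> None:
    for i, chunk in enumerate(chunk_text(body)):
        # Keep the chunk text and its source in metadata so answers can cite it.
        index.upsert(f"{url}#{i}", embed(chunk), {"url": url, "title": title, "text": chunk})
```

At query time, `index.query(embed(question), top_k=...)` returns the chunks to pass along as sources. The in-memory index scores every stored vector, which is fine for a sketch; vector databases such as Pinecone use approximate nearest-neighbor search so that queries stay fast over large collections.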

For more data-driven questions, we’re developing a more structured approach to searching the tens of thousands of ratings and reviews on CR’s website. Instead of semantic search, we’ll transform the data into a separate Pandas DataFrame for each product category and call its query() method to extract specific rows and columns. Before settling on this approach, we experimented with LangChain’s Pandas DataFrame agent, but found the security risks of giving an LLM access to a code interpreter too high. Because our application only needs read access to the tables (not write access), we were able to design a solution that constrains the LLM to writing structured queries.
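The query format isn’t specified here, so the sketch below is one assumption about what constraining the LLM to structured queries could look like. The model fills in a small spec (the `filters`, `sort_by`, `ascending`, `columns`, and `limit` keys are hypothetical), and Python validates every column name and operator against an allowlist before building the `DataFrame.query()` expression. Values are passed through `local_dict` and referenced as `@` variables instead of being pasted into the expression string.

```python
import pandas as pd

ALLOWED_OPS = {"==", "!=", "<", "<=", ">", ">="}
MAX_ROWS = 10  # hypothetical cap on rows returned to the synthesis step


def run_structured_query(df: pd.DataFrame, spec: dict) -> pd.DataFrame:
    """Run an LLM-produced query spec against one category's ratings table.

    The LLM never writes code or raw expressions: it only fills in the spec,
    and every column and operator is checked before anything is evaluated.
    """
    columns = set(df.columns)

    def check_column(name: str) -> str:
        if name not in columns:
            raise ValueError(f"unknown column: {name!r}")
        return name

    clauses, values = [], {}
    for i, f in enumerate(spec.get("filters", [])):
        column, op = check_column(f["column"]), f["op"]
        if op not in ALLOWED_OPS:
            raise ValueError(f"operator not allowed: {op!r}")
        # Bind each value as an @-variable rather than formatting it into the string.
        values[f"v{i}"] = f["value"]
        clauses.append(f"`{column}` {op} @v{i}")

    # query() returns a new DataFrame; the source table is never modified.
    result = df.query(" and ".join(clauses), local_dict=values) if clauses else df

    if "sort_by" in spec:
        result = result.sort_values(check_column(spec["sort_by"]), ascending=bool(spec.get("ascending", True)))
    selected = [check_column(c) for c in spec.get("columns", list(df.columns))]
    limit = max(0, min(int(spec.get("limit", MAX_ROWS)), MAX_ROWS))
    return result[selected].head(limit)
```

For example, with a hypothetical table that has `price` and `overall_score` columns, the spec `{"filters": [{"column": "price", "op": "<", "value": 1000}], "sort_by": "overall_score", "ascending": false}` returns the highest-scoring models under $1,000. Because query() evaluates an expression string, limiting the model’s contribution to allowlisted names and operators, with values bound separately, is what keeps it from injecting arbitrary expressions in this sketch.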

For the initial beta release of our chatbot, we’ll only route questions to our published articles, but members can expect to see the ratings and reviews integration soon after.

Synthesis

For the final step in our approach to LLM orchestration, we pass the source data returned by the retrieval step to an agent, along with custom instructions tailored to the question’s intent and topic. We’ve refined the agent through multiple iterations of prompt engineering so that it responds in a tone that we feel best reflects Consumer Reports’ reputation as an organization of trusted experts. We’re encouraged by the rapid progress in the LLM space and look forward to upgrading the agent to cutting-edge models like GPT-4o, Llama 3, and Claude 3 Opus.
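CR’s prompts aren’t published here, so the snippet below only sketches the mechanics of assembling the synthesis input. Base instructions plus intent- and category-specific instructions go into a system message, and the retrieved chunks are numbered so the model can cite them. All instruction strings, dictionary keys, and the `build_messages` name are hypothetical. The output uses the role/content message format accepted by chat-completion APIs such as OpenAI’s.

```python
# Hypothetical instruction snippets keyed by the routers' labels.
INTENT_INSTRUCTIONS = {
    "find_product": "Recommend specific products and explain the trade-offs between them.",
    "product_info": "Answer questions about the named product only.",
    "category_info": "Explain what matters when shopping in this category.",
}
CATEGORY_INSTRUCTIONS = {
    "cars": "Mention reliability and safety findings when the sources include them.",
}
BASE_INSTRUCTIONS = (
    "You are a research assistant for Consumer Reports. Answer only from the numbered "
    "sources provided, cite them like [1], and say so when they don't cover the question."
)


def build_messages(question: str, intent: str, category: str, sources: list[dict]) -> list[dict]:
    """Assemble role/content chat messages for the synthesis model."""
    # Combine base, intent, and category instructions, skipping any that are missing.
    system = " ".join(
        part
        for part in (
            BASE_INSTRUCTIONS,
            INTENT_INSTRUCTIONS.get(intent, ""),
            CATEGORY_INSTRUCTIONS.get(category, ""),
        )
        if part
    )
    # Number each retrieved chunk so the answer can cite it, e.g. [1].
    numbered = "\n\n".join(
        f"[{i}] {s['title']} ({s['url']})\n{s['text']}" for i, s in enumerate(sources, start=1)
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": f"Sources:\n{numbered}\n\nQuestion: {question}"},
    ]
```

Numbering the sources in the prompt also makes it straightforward to map citations in the generated text back to the links and titles shown to members.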

Once the agent begins generating a response, we stream it to the user on the front end, piece by piece. Our system currently takes around 12 seconds on average to produce a complete response, but streaming cuts the perceived wait to a few seconds, since members start reading as soon as the first piece arrives. Finally, we send the links, images, and titles of the source data to the front end as clickable cards, so that our members can dig deeper into their product research.
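How responses reach the front end isn’t specified here; the sketch below assumes server-sent events (SSE). `stream_events` is a hypothetical helper that forwards each generated piece as soon as it arrives and sends the source cards once generation finishes. With the `openai` Python SDK, `pieces` could be `(c.choices[0].delta.content for c in stream if c.choices)`, where `stream` comes from `client.chat.completions.create(..., stream=True)`.

```python
import json
from typing import Iterable, Iterator, Optional


def sse(payload: dict) -> str:
    """Format one server-sent event carrying a JSON payload."""
    return f"data: {json.dumps(payload)}\n\n"


def stream_events(pieces: Iterable[Optional[str]], sources: list[dict]) -> Iterator[str]:
    """Relay generated text as it arrives, then send source cards at the end."""
    for piece in pieces:
        if piece:  # streamed chunks can carry empty or None content; skip them
            yield sse({"type": "token", "text": piece})
    # Hypothetical card fields: the front end renders these as clickable cards.
    cards = [{"title": s["title"], "url": s["url"], "image": s.get("image")} for s in sources]
    yield sse({"type": "sources", "cards": cards})
    yield sse({"type": "done"})
```

Because the first `token` event goes out as soon as the model emits its first piece, the wait a member perceives tracks time to first token rather than total generation time.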

The development process is set to continue far past the initial release of our beta in June. We look forward to sharing updates with you on the process and our discoveries along the way! In the meantime, drop us a line at innovationlab@cr.consumer.org if you have any feedback on our approach to LLM application development.