Knowledge graphs are structured representations of information, typically consisting of nodes (entities) connected by labeled edges (relationships). These structured formats allow systems to understand not just individual pieces of information, but how different concepts relate to one another.
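
As a minimal sketch of the idea, a knowledge graph can be held as a list of (source, relation, target) triples. The entities and relation names below are invented purely for illustration and are not taken from CR’s data.

```python
from collections import defaultdict
from typing import NamedTuple


class Edge(NamedTuple):
    """One labeled edge: source node, relationship label, target node."""
    source: str
    relation: str
    target: str


# Hypothetical triples, invented for illustration only.
edges = [
    Edge("Model X Sedan", "MADE_BY", "Brand A"),
    Edge("Model X Sedan", "HAS_FEATURE", "Adaptive Cruise Control"),
    Edge("Model Y Hatchback", "MADE_BY", "Brand A"),
]


def neighbors(edge_list):
    """Index outgoing (relation, target) pairs by source node."""
    index = defaultdict(list)
    for edge in edge_list:
        index[edge.source].append((edge.relation, edge.target))
    return dict(index)


graph = neighbors(edges)
print(graph["Model X Sedan"])
```

The index makes the "how concepts relate" part explicit: looking up a node returns its relationships, not just the node itself.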

I joined Consumer Reports (CR) as an AI Knowledge Integration Consultant to enhance AskCR, CR’s expert-powered advisor which answers questions based on CR’s trusted data and research. My goal was to incorporate expert reasoning and intelligence from the 6k+ articles and buying guides created by CR’s product experts. Using this approach, we can generate answers to queries that are enriched with specialized information derived exclusively from CR’s database.

General Architecture

I began with a rough draft of the architecture of our current system. The Knowledge Graph block is meant to enrich the context for smarter retrieval, feeding into all of our other retrieval layers.


Steps to Mirror the “Expert Knowledge”

One of the most challenging aspects of building intelligent systems is capturing and replicating the nuanced expertise that CR’s human experts possess. When we set out to mirror their deep knowledge, we faced a fundamental question: how do you extract not just information, but the underlying reasoning and expertise embedded within a database?

The Challenge of Knowledge Extraction

Think of expert knowledge like an iceberg. The visible tip represents the facts and data points that are easy to identify and extract. However, the massive underwater portion contains the reasoning, context, and subtle connections that experts use to make informed decisions. Traditional information retrieval methods excel at grabbing the tip but often miss the crucial reasoning that lies beneath the surface.

Our database contains vast amounts of information that’s been processed using existing logic for retrieval-augmented generation (RAG). While this gives us well-structured, chunked excerpts of content, it doesn’t capture the expert-level insights and reasoning patterns.

Introducing a “Thinking and Reasoning” Layer

To bridge this gap, I developed a “Thinking and Reasoning” layer. This approach works much like having a thoughtful colleague review each piece of information and ask themselves: “What does this really mean? What are the implications? How confident am I in this interpretation?”

I leveraged GPT-4o as the reasoning engine, instructing it to process each chunked excerpt with the mindset of an expert analyst. For every piece of content, the system was tasked with identifying not just the surface-level information, but the deeper insights and reasoning patterns that a CR product expert would naturally recognize.

Workflow Used for Curation of the Knowledge Graph

For curating the Knowledge Graph, we utilize our concept of the “Thinking and Reasoning Layer” in the following manner.

Hallucination Mitigation for the “Thinking and Reasoning” Layer

I started off trying to get the following fields out of the text:

Original fields:

- “expert_knowledge“: [“The specific advice or knowledge“, ..]

- “reasoning“: [“Why this qualifies as expert knowledge“, ..]

- “category“: [“One of the 8 categories above“, …]

- “confidence_score“: [8,..]

All fields are fairly self-explanatory except for “category.” I wanted the LLM to categorize the type of knowledge it extracted from the text. These categories included “Technical Insights,” “Performance Comparisons,” “Value Assessments,” and other general categories that knowledge from consumer product review articles could be classified into.

Extracting this information through the LLM presented challenges, particularly hallucinations. One clear indication of hallucination was a mismatch in the lengths of the four fields: expert_knowledge, reasoning, category, and confidence_score.
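
One way such a length mismatch could be detected is sketched below. It assumes the LLM returns a JSON object with the four fields listed above; the helper names and the sample response are hypothetical.

```python
import json

FIELDS = ("expert_knowledge", "reasoning", "category", "confidence_score")


def field_lengths(raw_json: str) -> dict:
    """Return the list length of each expected field (0 if missing or not a list)."""
    parsed = json.loads(raw_json)
    return {
        name: len(parsed[name]) if isinstance(parsed.get(name), list) else 0
        for name in FIELDS
    }


def is_aligned(lengths: dict) -> bool:
    """True when every field has the same number of entries."""
    return len(set(lengths.values())) == 1


# Hypothetical LLM response in which one reasoning entry is missing.
sample = json.dumps({
    "expert_knowledge": ["advice A", "advice B"],
    "reasoning": ["why A"],
    "category": ["Value Assessments", "Technical Insights"],
    "confidence_score": [8, 6],
})
lengths = field_lengths(sample)
print(lengths, is_aligned(lengths))
```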

Despite clear instructions to the LLM to extract knowledge and provide reasoning and a confidence score for each extraction, the lengths of these four outputs did not match. This inconsistency indicated hallucination stemming from the input instructions. To mitigate this issue, we took several steps. First, we reduced the number of fields the LLM was required to extract to the three that were absolutely essential for our task.

New fields:

- “expert_knowledge“: [“The specific advice or knowledge“, ..]

- “reasoning“: [“Why this qualifies as expert knowledge“, ..]

- “confidence_score“: [8,..]

Second, for each excerpt we took the mode of the three output lengths, that is, the length that occurs most often among the three lists. Once we had the mode for every excerpt, we averaged these modes.

mode length of an excerpt = mode( length(knowledge), length(reasoning), length(confidence_score) )

deciding average = average( mode lengths of all excerpts )

Here knowledge, reasoning, and confidence_score are all lists.

We found this average to be 3.59
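
The calculation above can be sketched as follows. The sample extractions are made up (they are not CR data and do not reproduce the 3.59 figure), and the function names are hypothetical.

```python
import math
from statistics import mean, mode


def mode_length(extraction: dict) -> int:
    """Most common list length among the three fields of one excerpt."""
    lengths = [
        len(extraction["expert_knowledge"]),
        len(extraction["reasoning"]),
        len(extraction["confidence_score"]),
    ]
    # On Python 3.8+, statistics.mode returns the first value seen on a tie.
    return mode(lengths)


def target_count(extractions: list) -> int:
    """Average the per-excerpt modes and round down to the lower limit."""
    return math.floor(mean(mode_length(e) for e in extractions))


# Hypothetical extractions with deliberately uneven list lengths.
extractions = [
    {"expert_knowledge": ["a"] * 3, "reasoning": ["r"] * 3, "confidence_score": [8, 7]},
    {"expert_knowledge": ["a"] * 4, "reasoning": ["r"] * 4, "confidence_score": [9, 6, 5, 8]},
    {"expert_knowledge": ["a"] * 5, "reasoning": ["r"] * 4, "confidence_score": [9, 6, 5, 8]},
]
print([mode_length(e) for e in extractions], target_count(extractions))
```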

This average indicates how many pieces of knowledge we can derive from an excerpt on average. Taking its lower limit, 3, we instructed the LLM to extract exactly 3 pieces of knowledge, each with its reasoning and confidence score.

We took the lower limit on the intuition that asking the LLM for more than the average of the modes would likely push it to draw out information that is not there.

One could argue that 3 is still too many for smaller excerpts that may not contain much information. The confidence score takes care of this: if the confidence scores are low, we can instruct the LLM not to treat those pieces of knowledge as valid. How the confidence score was used is covered in the following sections.
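
The article handles low-confidence pieces through prompt instructions. A comparable filter in code might look like the sketch below; the `KnowledgePiece` record and the threshold of 7 are assumptions made for illustration, not values from the project.

```python
from dataclasses import dataclass


@dataclass
class KnowledgePiece:
    knowledge: str
    reasoning: str
    confidence: int  # self-reported by the LLM, out of 10


def to_pieces(extraction: dict) -> list:
    """Zip the three parallel lists into one record per piece of knowledge."""
    return [
        KnowledgePiece(k, r, c)
        for k, r, c in zip(
            extraction["expert_knowledge"],
            extraction["reasoning"],
            extraction["confidence_score"],
        )
    ]


def keep_confident(pieces, min_confidence=7):
    """Drop pieces whose score falls below the assumed threshold."""
    return [p for p in pieces if p.confidence >= min_confidence]


# Hypothetical extraction from a short excerpt with little real content.
extraction = {
    "expert_knowledge": ["advice A", "advice B", "advice C"],
    "reasoning": ["why A", "why B", "why C"],
    "confidence_score": [9, 4, 7],
}
confident = keep_confident(to_pieces(extraction))
print([p.knowledge for p in confident])
```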

Ultimately the output of this layer looked like this:

Knowledge Graph Curation Layer

Now that we have our knowledge extracted, we can work on curating the Knowledge Graph itself. Since we were still figuring out how the information should be structured, LLM-assisted curation of the KG seemed the better option.

Tech stack for the KG:

-

- LangChain

- Neo4j, for graph database.

Our primary use of LangChain here was its LLMGraphTransformer().

A Little on LLMGraphTransformer()

It’s a class that converts documents into graph documents. The arguments it accepts are:

- llm: the base model to be used for the KG curation

- allowed_nodes (optional): a list of nodes that are allowed to be in the KG

- allowed_relationships (optional): a list of relationships that are allowed to be in the KG

- prompt (optional): the prompt of instructions

- strict_mode (optional): Decides whether to strictly stick to allowed_nodes and allowed_relationships or not.

- node_properties (optional): either a list of property names that the LLM may extract from the text, or True to let the LLM decide which properties to extract. These properties are fields stored on the created nodes as a kind of metadata.

- relationship_properties (optional): same as node_properties but for relationships.

- ignore_tool_usage (optional): If set to True, the transformer will not use the language model’s native function calling capabilities to handle structured output. Defaults to False.

All the arguments with the exception of llm are optional.

The arguments allowed_nodes, allowed_relationships, and prompt are the ones that give direct control over the kind of schema that is created.
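
A minimal sketch of how these arguments might be wired together is shown below. The node labels are the ones named later in this article; the relationship types, the helper names, and the choice of strict_mode=True are assumptions for illustration and not the project’s actual configuration.

```python
# Schema-controlling arguments. The node labels come from the article's final
# results; the relationship types are hypothetical placeholders.
ALLOWED_NODES = ["Product", "Brand", "Technology"]
ALLOWED_RELATIONSHIPS = ["MADE_BY", "RECOMMENDED_FOR"]


def build_transformer(llm):
    """Create an LLMGraphTransformer restricted to the schema above."""
    # Imported here so the constants can be inspected without LangChain installed.
    from langchain_experimental.graph_transformers import LLMGraphTransformer

    return LLMGraphTransformer(
        llm=llm,
        allowed_nodes=ALLOWED_NODES,
        allowed_relationships=ALLOWED_RELATIONSHIPS,
        strict_mode=True,  # keep only nodes and relationships in the allowed lists
    )


def curate(llm, documents):
    """Convert LangChain Document objects into graph documents."""
    return build_transformer(llm).convert_to_graph_documents(documents)
```

With an OpenAI key configured, calling `curate(ChatOpenAI(model="gpt-4o"), docs)` would be one way to run it, where `ChatOpenAI` comes from the `langchain_openai` package and `docs` is a list of LangChain documents.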

One obvious question is whether, given the variation between LLM passes, the KGs created in each pass differ. They do, but not wildly. In our experience the big features, such as the most connected node and the cluster patterns, were largely the same across passes, provided we did not change the values of the schema-controlling arguments: allowed_nodes, allowed_relationships, and prompt.
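
One rough way to quantify this stability is to compare the most connected node and the overlap of triples between two passes. The sketch below uses made-up triples and a Jaccard overlap, which is my own choice of measure rather than something the project reports using.

```python
from collections import Counter


def most_connected(triples):
    """Return the node that appears in the largest number of triples."""
    degree = Counter()
    for source, _relation, target in triples:
        degree[source] += 1
        degree[target] += 1
    return degree.most_common(1)[0][0]


def jaccard(a, b):
    """Share of triples common to both passes (1.0 means identical)."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if a | b else 1.0


# Hypothetical outputs of two curation passes over the same excerpts.
pass_1 = [
    ("Sedan", "HAS_FEATURE", "Airbags"),
    ("Sedan", "MADE_BY", "Brand A"),
    ("Sedan", "COMPARED_WITH", "Hatchback"),
    ("Hatchback", "MADE_BY", "Brand A"),
]
pass_2 = [
    ("Sedan", "HAS_FEATURE", "Airbags"),
    ("Sedan", "MADE_BY", "Brand A"),
    ("Sedan", "COMPARED_WITH", "Hatchback"),
    ("Hatchback", "HAS_FEATURE", "Airbags"),
]
print(most_connected(pass_1), most_connected(pass_2), jaccard(pass_1, pass_2))
```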

It is worth looking at the default value of the prompt argument in LLMGraphTransformer() to get a sense of how it is prompted.

Default Prompt(s) for LLMGraphTransformer():

(default) System Prompt:

# Knowledge Graph Instructions for GPT-4

## 1. Overview

You are a top-tier algorithm designed for extracting information in structured formats to build a knowledge graph.

Try to capture as much information from the text as possible without sacrificing accuracy. Do not add any information that is not explicitly mentioned in the text.

**Nodes** represent entities and concepts.

The aim is to achieve simplicity and clarity in the knowledge graph, making it accessible for a vast audience.

## 2. Labeling Nodes

**Consistency**: Ensure you use available types for node labels.

Ensure you use basic or elementary types for node labels.

For example, when you identify an entity representing a person, always label it as **’person’**. Avoid using more specific terms like ‘mathematician’ or ‘scientist’.- **Node IDs**: Never utilize integers as node IDs. Node IDs should be names or human-readable identifiers found in the text.

**Relationships** represent connections between entities or concepts.

Ensure consistency and generality in relationship types when constructing knowledge graphs. Instead of using specific and momentary types such as ‘BECAME_PROFESSOR’, use more general and timeless relationship types like ‘PROFESSOR’. Make sure to use general and timeless relationship types!

## 3. Coreference Resolution

**Maintain Entity Consistency**: When extracting entities, it’s vital to ensure consistency.

If an entity, such as “John Doe”, is mentioned multiple times in the text but is referred to by different names or pronouns (e.g., “Joe”, “he”),always use the most complete identifier for that entity throughout the knowledge graph. In this example, use “John Doe” as the entity ID.

Remember, the knowledge graph should be coherent and easily understandable, so maintaining consistency in entity references is crucial.

## 4. Strict Compliance

Adhere to the rules strictly. Non-compliance will result in termination.

(default) User Prompt:

Tip: Make sure to answer in the correct format and do not include any explanations. Use the given format to extract information from the following input: {input}

Now that we have the basics of what a prompt to LLMGraphTransformer() looks like, we can go over some experiments we ran on how to pass in the Expert Knowledge that we collected from our articles.

Experiment 1: Just Metadata

We attached these fields in the excerpts’ metadata: allowed_nodes, allowed_relationships, and prompt, since LLMGraphTransformer() is believed to look at the metadata when converting documents to graph documents.

With this approach, over a limited number of excerpts, we ended up with these nodes and relationships:

Nodes Summary:

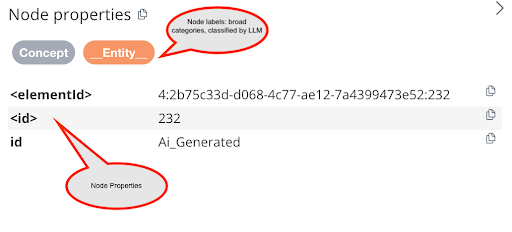

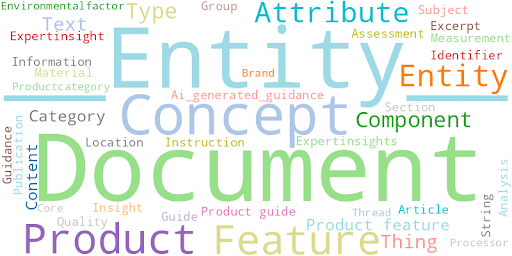

A wordcloud of the node labels with their sizes being defined by the frequency of their repetition in the KG

Relationship Summary:

A wordcloud of the relationship labels with their sizes being defined by the frequency of their repetition in the KG.

From the relationships above, we observed that the expert knowledge relationships were still missing from the KG. Most of the relationships in the KG were still plain natural language words.
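
The frequencies behind such a word cloud can be obtained with a simple count. This is only a sketch; the relationship labels below are hypothetical and not taken from our KG.

```python
from collections import Counter


def label_frequencies(labels):
    """Return (label, count) pairs, most frequent first."""
    return Counter(labels).most_common()


# Hypothetical relationship types collected from a curated KG.
relationship_types = ["IS", "HAS_FEATURE", "IS", "OUTPERFORMS", "IS", "HAS_FEATURE"]
frequencies = label_frequencies(relationship_types)
print(frequencies)
```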

Experiment 2: Enhancing Document Text with the Extracted Expert Knowledge

Another method for passing on the knowledge and reasoning of experts to the curation of KG was to enhance each excerpt with the fields of knowledge, reasoning and confidence score. This was done by creating enhanced excerpts using annotations; something like this:

### Content:

{doc.page_content}\n

### EXPERT KNOWLEDGE AND REASONING GUIDANCE (AI-Generated, Use with Caution):

1. Expert Knowledge: {doc.metadata[“expert_knowledge”][0]}

Reasoning of why this is considered Expert Knowledge:

{doc.metadata[“reasoning”][0]}

Confidence Score (Out of 10): {doc.metadata[“confidence_score”][0]}\n

2. Expert Knowledge: {doc.metadata[“expert_knowledge”][1]}

Reasoning of why this is considered Expert Knowledge:

{doc.metadata[“reasoning”][1]}

Confidence Score (Out of 10): {doc.metadata[“confidence_score”][1]}\n

3. Expert Knowledge: {doc.metadata[“expert_knowledge”][2]}

Reasoning of why this is considered Expert Knowledge:

{doc.metadata[“reasoning”][2]}

Confidence Score (Out of 10): {doc.metadata[“confidence_score”][2]}\n
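
A sketch of how such an enhanced excerpt could be assembled in code is given below. The `Doc` class is a stand-in that mirrors the `page_content` and `metadata` attributes of a LangChain document, and `enhance` is a hypothetical helper name.

```python
from dataclasses import dataclass, field


@dataclass
class Doc:
    """Stand-in for a LangChain Document (same attribute names)."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def enhance(doc: Doc) -> str:
    """Append the extracted knowledge, reasoning, and scores to the excerpt text."""
    parts = [
        "### Content:",
        doc.page_content,
        "",
        "### EXPERT KNOWLEDGE AND REASONING GUIDANCE (AI-Generated, Use with Caution):",
    ]
    units = zip(
        doc.metadata["expert_knowledge"],
        doc.metadata["reasoning"],
        doc.metadata["confidence_score"],
    )
    for i, (knowledge, reasoning, score) in enumerate(units, start=1):
        parts += [
            f"{i}. Expert Knowledge: {knowledge}",
            "Reasoning of why this is considered Expert Knowledge:",
            str(reasoning),
            f"Confidence Score (Out of 10): {score}",
            "",
        ]
    return "\n".join(parts)


# Hypothetical excerpt with two extracted units.
doc = Doc(
    page_content="Excerpt text goes here.",
    metadata={
        "expert_knowledge": ["advice A", "advice B"],
        "reasoning": ["why A", "why B"],
        "confidence_score": [8, 6],
    },
)
enhanced_text = enhance(doc)
print(enhanced_text)
```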

Nodes Summary:

Relationship Summary:

As we can see from the limited number of nodes, this method didn’t really add anything significant in mirroring the expert reasoning we were looking for.

Experiment 3: Metadata Utilized in the Prompt

In a similar vein as the last experiment, we experimented with utilizing metadata in the prompt being passed to LLMGraphTransformer().

This was done as follows:

user_prompt = f”””

………….

INPUT TEXT TO ANALYZE:

[‘text’]

### EXPERT KNOWLEDGE AND REASONING GUIDANCE (AI-Generated, Use with Caution):

Below are three points. Each containing three pointers – Expert Knowledge, Reasoning and Confidence Score. Expert Knowledge is text from the excerpt itself.\

It is what has been identified as Expert Knowledge by AI and Reasoning contains the reason AI think so and the Confidence Score, the confidence it has in this finding.\

Remember everything under this is AI generated and thus should NOT be used as exact facts but instead used as indicator for what relationships should look like in the Knowledge Graph.

[‘Expert_Units’]

………….

”””

where ‘Expert_Units’ was a structured representation of the three fields, formatted the way we wanted them to appear in the prompt.

Building on our Self-Confidence Reasoning logic, we also instruct the LLM in the above prompt to consider only those pieces of knowledge that have a high corresponding confidence score.
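
As an illustration of what building ‘Expert_Units’ and filling the prompt could look like, here is a minimal sketch. The JSON layout, the shortened template, and the helper names are assumptions; the elided parts of the real prompt are not reproduced.

```python
import json

# Shortened, hypothetical stand-in for the user prompt shown above.
PROMPT_TEMPLATE = (
    "INPUT TEXT TO ANALYZE:\n{text}\n\n"
    "### EXPERT KNOWLEDGE AND REASONING GUIDANCE (AI-Generated, Use with Caution):\n"
    "{expert_units}\n"
)


def build_expert_units(metadata: dict) -> str:
    """Group the three parallel lists into one JSON record per unit."""
    units = [
        {"expert_knowledge": k, "reasoning": r, "confidence_score": c}
        for k, r, c in zip(
            metadata["expert_knowledge"],
            metadata["reasoning"],
            metadata["confidence_score"],
        )
    ]
    return json.dumps(units, indent=2)


def build_user_prompt(text: str, metadata: dict) -> str:
    """Fill the template with the excerpt text and its expert units."""
    return PROMPT_TEMPLATE.format(text=text, expert_units=build_expert_units(metadata))


# Hypothetical excerpt and metadata.
user_prompt = build_user_prompt(
    "Excerpt text goes here.",
    {
        "expert_knowledge": ["advice A"],
        "reasoning": ["why A"],
        "confidence_score": [8],
    },
)
print(user_prompt)
```

If the rendered text is later embedded in a LangChain prompt template that uses f-string formatting, the literal braces in the JSON would likely need to be escaped.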

The results we obtained are given below.

Nodes Summary:

Relationships Summary:

Juxtaposing the Three Experiments’ Relationships Results:

Just Metadata

Enhanced Document

Metadata Utilization in Prompt

We can observe from the above results that the last method, with the metadata correctly formatted and used in the prompt, works best: it yields a dense group of relationships, the majority of which read like expert advice.

Final Results of the Knowledge Graph

After a few more enhancements in our instructions to the LLM for the curation, we arrive at these nodes and relationships. We made significant improvements by shifting many of the general instructions that applied to all documents from the “system prompt” to the “user prompt,” streamlining the overall design. Additionally, the issue of overly broad nodes such as Ai_generated_guidance has been largely resolved. Nodes are now being organized under clearer and more meaningful categories like Product, Brand, and Technology, resulting in a more structured and intuitive knowledge graph.

Nodes Summary:

Relationships Summary:

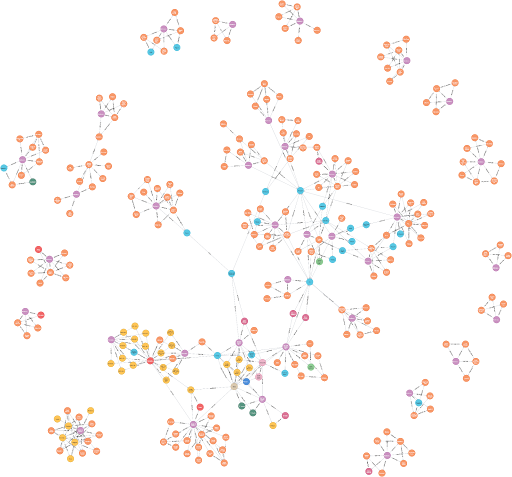

Here’s a bird’s-eye view of the final Knowledge Graph we arrived at:

One thing evident right off the bat is that this graph has far more separate clusters than the earlier passes at curation.

For contrast, here’s the same bird’s-eye view of one of the earlier curated KGs:

Now, since we are using this Knowledge Graph as a tool for exploring the different information and structures present in our existing dataset, we can observe some interesting patterns. The first Knowledge Graph, which received more specific instructions, has created numerous meaningful delineations on its own. In contrast, the second Knowledge Graph, which was generated without specific instructions, appears to be creating spurious relationships between entities that may not be entirely logical or accurate.

With more data fed into the earlier Knowledge Graph approach, we would likely see even more refined results, though what we have here represents just a prototype working with a limited number of excerpts.

Observable Points of Tension in the Curation

Seemingly Spurious Relationships

The LLM sometimes creates relationships that are not supported by the information in the text. An example of this fallacy is a relationship that does not match the information in the text.

We tried to address this by adding the following to the system prompt:

“## 4. Extreme accuracy to the text\n”

“- You have to maintain Extreme Accuracy when deriving nodes and relationships, any entity added to the graph should be 100% accurate to the information in the text.\n\n\n”

This does not fully mitigate the issue, which should be watched with extreme caution when curating the graph.
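
A cheap complementary check, which was not part of our pipeline and is offered only as a sketch, is to flag triples whose node IDs never appear in the source text. It catches invented entities only; it cannot tell whether a relationship between two real entities is correct.

```python
def ungrounded_triples(triples, source_text):
    """Return triples whose source or target node is not mentioned in the text."""
    text = source_text.lower()
    return [
        (source, relation, target)
        for source, relation, target in triples
        if source.lower() not in text or target.lower() not in text
    ]


# Hypothetical excerpt and extracted triples.
excerpt = "The sedan comes with six airbags as standard."
triples = [
    ("Sedan", "HAS_FEATURE", "Airbags"),
    ("Sedan", "MADE_BY", "Brand Z"),  # "Brand Z" never appears in the excerpt
]
flagged = ungrounded_triples(triples, excerpt)
print(flagged)
```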

Node Repetitions

There seems to be a trend of creating separate clusters from the same document.

An example is the Sedan & Hatchback node in the following:

These need to be watched for, since in the above example I don’t see how creating two different clusters around the same buying guide would help the Knowledge Graph.
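
If the repetition shows up as near-identical node IDs, which is an assumption about how it manifests, a simple normalization pass could surface candidates for merging. The helper names and sample IDs below are hypothetical.

```python
import re
from collections import defaultdict


def normalize(node_id: str) -> str:
    """Lowercase the ID and collapse punctuation and spacing differences."""
    return re.sub(r"[^a-z0-9]+", " ", node_id.lower()).strip()


def duplicate_groups(node_ids):
    """Group node IDs that normalize to the same key; keep groups of two or more."""
    groups = defaultdict(set)
    for node_id in node_ids:
        groups[normalize(node_id)].add(node_id)
    return {key: sorted(ids) for key, ids in groups.items() if len(ids) > 1}


# Hypothetical node IDs from a curated KG.
node_ids = ["Sedan & Hatchback", "sedan & hatchback", "SUV", "Sedan & Hatchback"]
duplicates = duplicate_groups(node_ids)
print(duplicates)
```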

Natural Language over Expert Reasoning

As expected, natural language relationships are more prevalent than expert reasoning lingo.

This can be mitigated to a large degree through prompt engineering, as you can see below in the change from the first set of relationships to the last.

First:

Last:

I personally feel that as we move towards more intelligent, task-specific, and explainable AI systems, the ability to capture and mirror expert knowledge in structured and connected ways will decide how products learn and evolve. On a macro level, I also feel that the closer this mirroring and the clearer this explainability become, the more productive AI can be for society moving forward.

If this article resonated with your interests or work, I’d love to connect and discuss. You can find me at up2147@columbia.edu or via LinkedIn.